Conversational User Interface and Image Recognition

Graduate Studio Studies (2020)

“As we enter 2020, human interaction through and with computers continues to evolve beyond GUI interfaces. Machine learning now enables computers to learn about the world and make predictions using structured and unstructured data. We can speak to machines—and machines can speak back. We can gesture to devices, expressing emotion and intent, and machines can respond meaningfully. We look to computers not just for interaction, but for companionship.

Research in machine learning has led to two particular technologies—Natural Language Processing (NLP) and Image Recognition—that enrich this sense of companionship. NLP empowers machines to understand and communicate via human language, while Image Recognition allows machines to “see.” We presently interact with computers using methods that—until now—we have only experienced via other biological, sentient beings. Our relationship with machines has fundamentally changed.

What do these evolving technologies and changing relationships mean for the designer? How might we map out companionship between human and machine? What dangers must we address? What destructive ideologies must we reveal? What possibilities for a better future might we explore and prototype? Ultimately, how might we ensure that machines uphold human values, rather than humans converting to machine values? This semester, we will take on this research topic together with a particular focus on healthcare.”

— Helen Armstrong, GD503 SP20

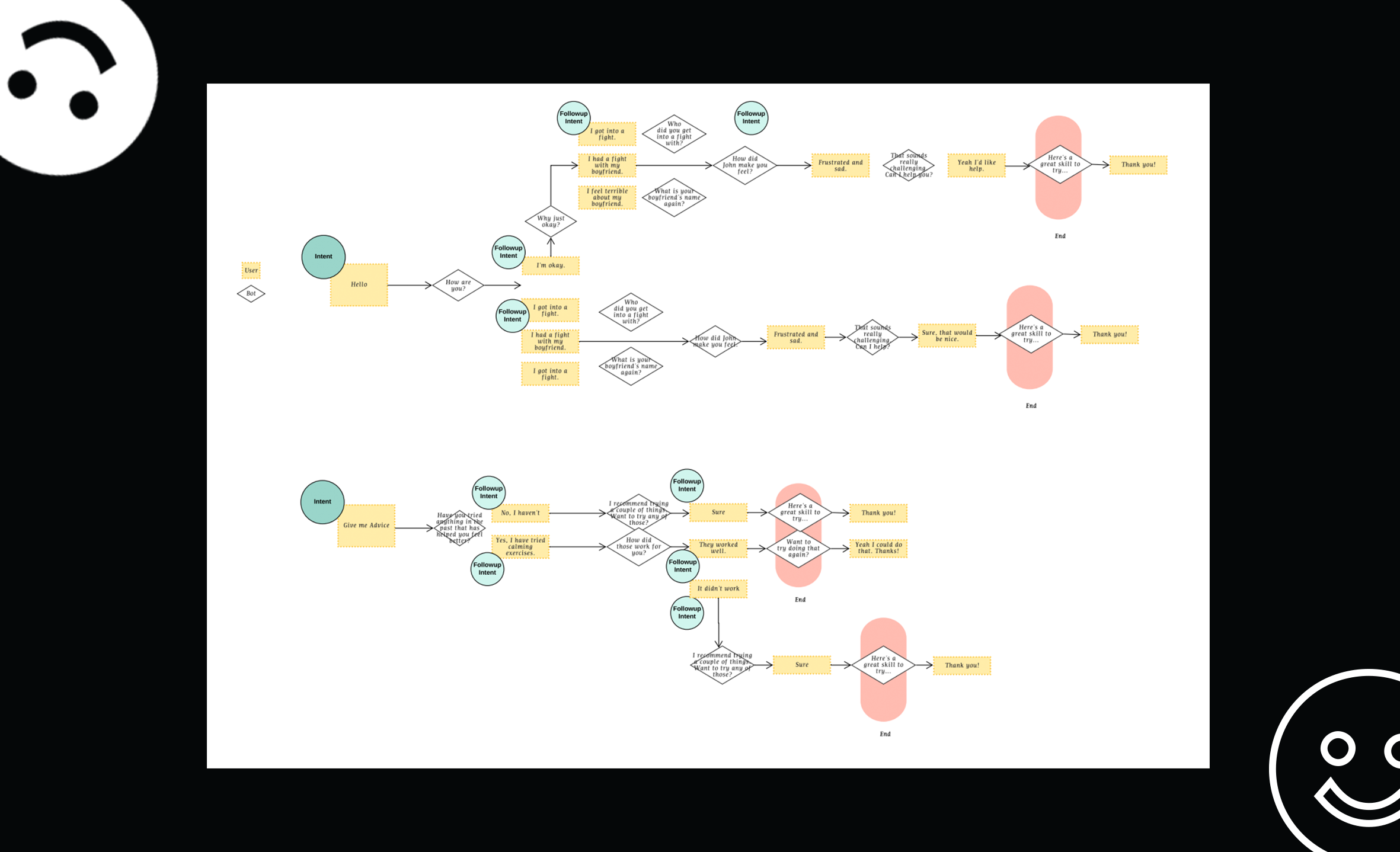

Study 01

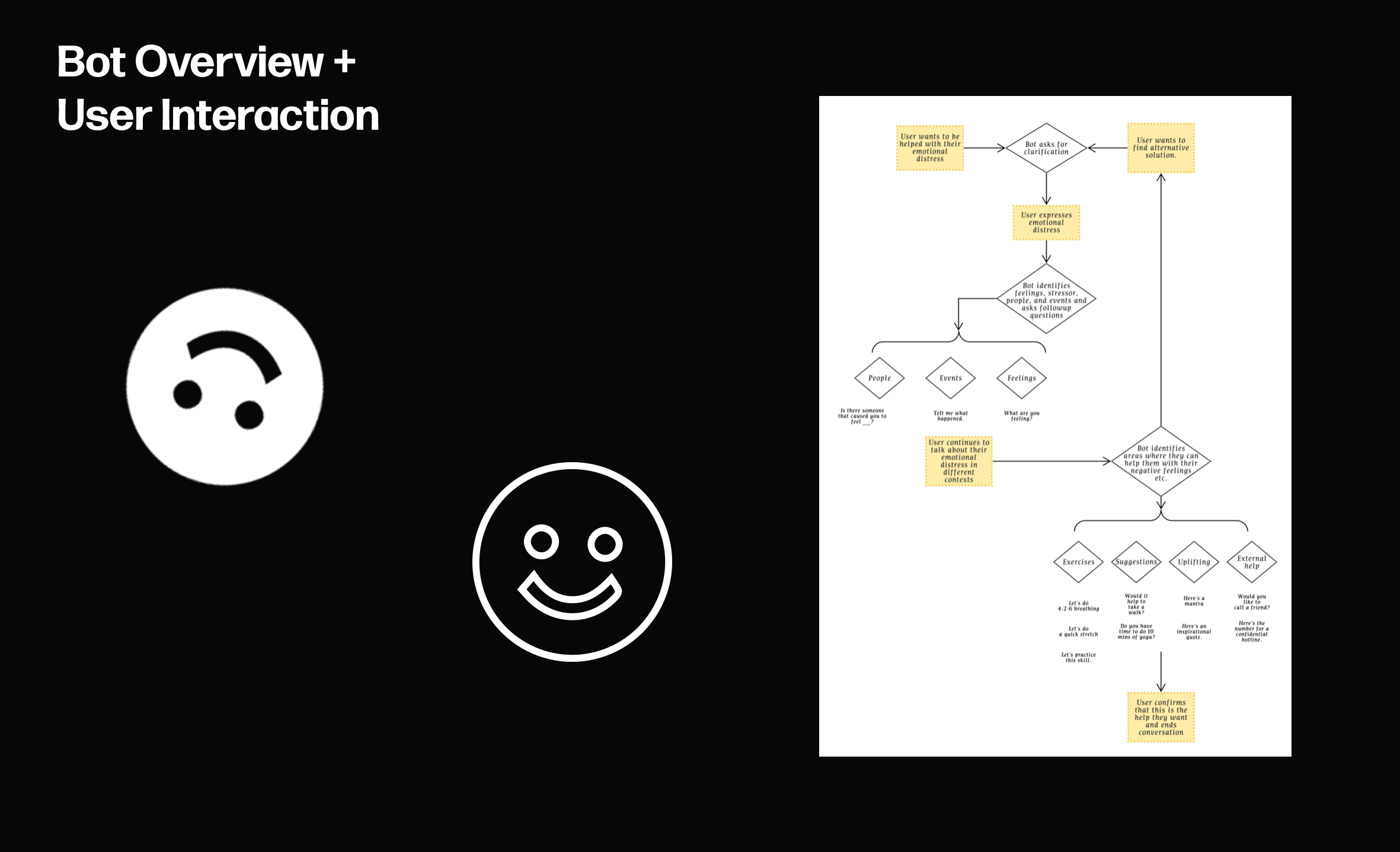

Study 01 looks at the basics of designing conversational user interfaces (CUIs). Understanding how to write and program intents, sub-intents, and entities was key to this project's success.
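To make those three terms concrete, here is a minimal Python sketch of the idea, not Dialogflow's API. It assumes that "sub-intents" correspond to what Dialogflow calls follow-up intents (intents that can only match right after their parent), and it uses plain substring matching in place of Dialogflow's machine-learning intent matching. The intent names, training phrases, and the feeling entity are hypothetical examples, not taken from the actual Wendy bot.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical custom entity: each canonical value lists its synonyms.
FEELING_ENTITY = {
    "anxious": ["anxious", "nervous", "worried"],
    "stressed": ["stressed", "overwhelmed"],
}

@dataclass
class Intent:
    name: str
    training_phrases: list[str]
    response: str
    parent: Optional[str] = None  # set only for sub-intents (follow-ups)

# Hypothetical intents for a calming bot.
INTENTS = [
    Intent("share_feeling", ["i feel", "i am feeling"],
           "Thanks for telling me you feel {feeling}. Want to try a breathing exercise?"),
    Intent("accept_exercise", ["yes", "sure", "okay"],
           "Breathe in for four counts, then out for four.",
           parent="share_feeling"),
]

def extract_feeling(text: str) -> Optional[str]:
    """Return the canonical entity value if any synonym appears in the text."""
    for canonical, synonyms in FEELING_ENTITY.items():
        if any(s in text for s in synonyms):
            return canonical
    return None

def match(text: str, last_intent: Optional[str] = None) -> tuple[str, str]:
    """Return (intent name, reply) for a user utterance."""
    text = text.lower()
    for intent in INTENTS:
        # A sub-intent is only eligible directly after its parent intent.
        if intent.parent is not None and intent.parent != last_intent:
            continue
        if any(p in text for p in intent.training_phrases):
            feeling = extract_feeling(text) or "this way"
            return intent.name, intent.response.format(feeling=feeling)
    # Nothing matched: reply the way a fallback intent would.
    return "fallback", "Sorry, could you say that another way?"
```

For example, `match("I feel really nervous today")` resolves "nervous" to the `anxious` entity value, and a following `match("yes", last_intent="share_feeling")` reaches the sub-intent, whereas a bare `"yes"` at the start of a conversation falls through to the fallback reply.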

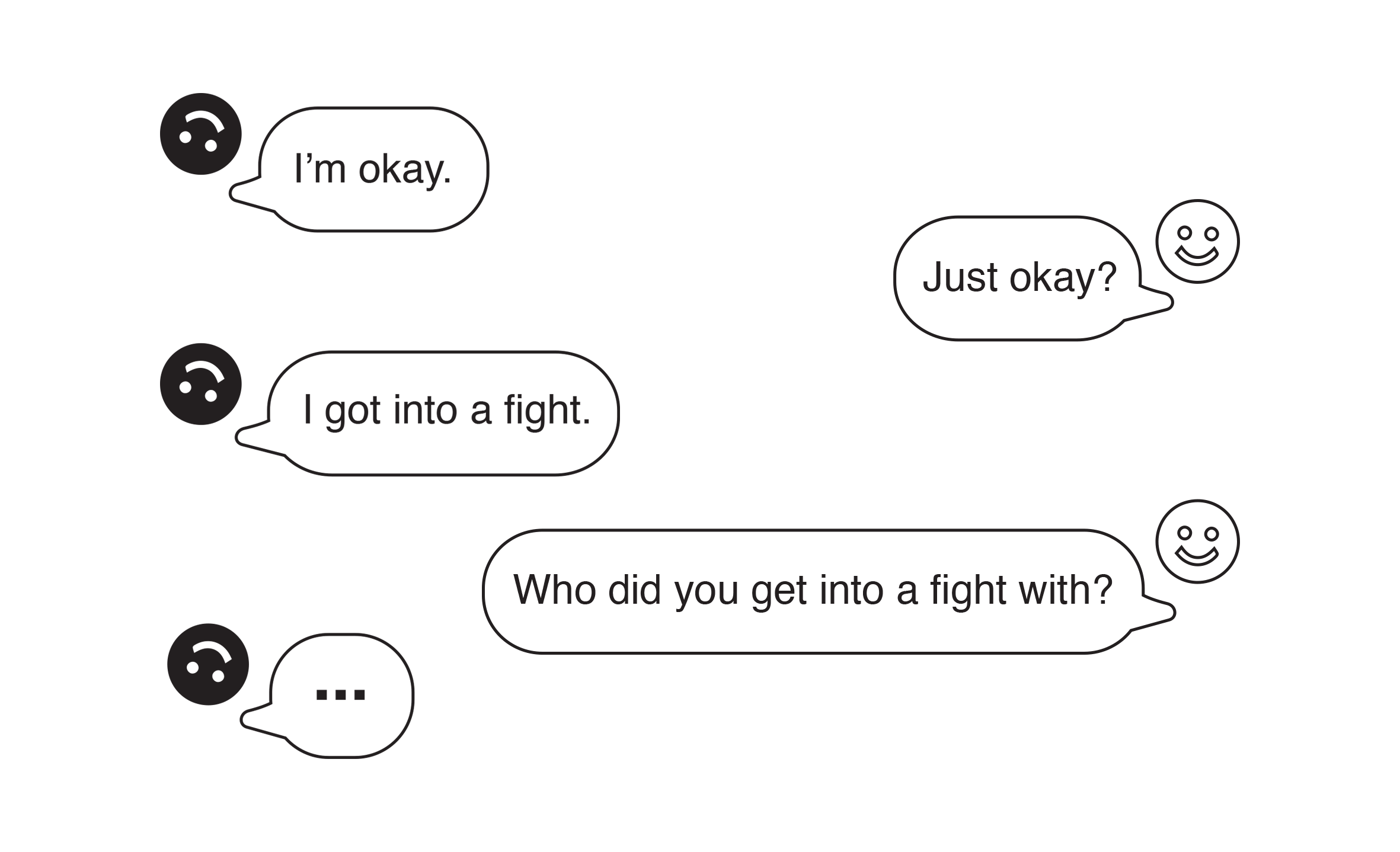

Final Product: Wendy the Calming Bot

Prompt: Design a functional CUI in Dialogflow.

Outcome: Users felt heard and listened to; however, the chatbot's final advice could have been more specific.

Challenges: Limited time to explore all conversational workflows.

Process: Brainstorm / Interview Users / Form Personality / Bot Script / Overview Diagram / Scenario Diagram / Create Intents, Sub-Intents, Entities / Test Bot with Users and Refine

1 Build chatbots with Dialogflow <https://developers.google.com/learn/pathways/chatbots-dialogflow>

2 Bot overview + user interaction pdf <https://drive.google.com/file/d/1VF48VfBL9Gqit4UQTWAUs2rHl6a77reG/view?usp=sharing>

3 Scenario pdf <https://drive.google.com/file/d/1ZO7109JhudWCADZT3ubVI_oI2zefGUEo/view?usp=sharing>

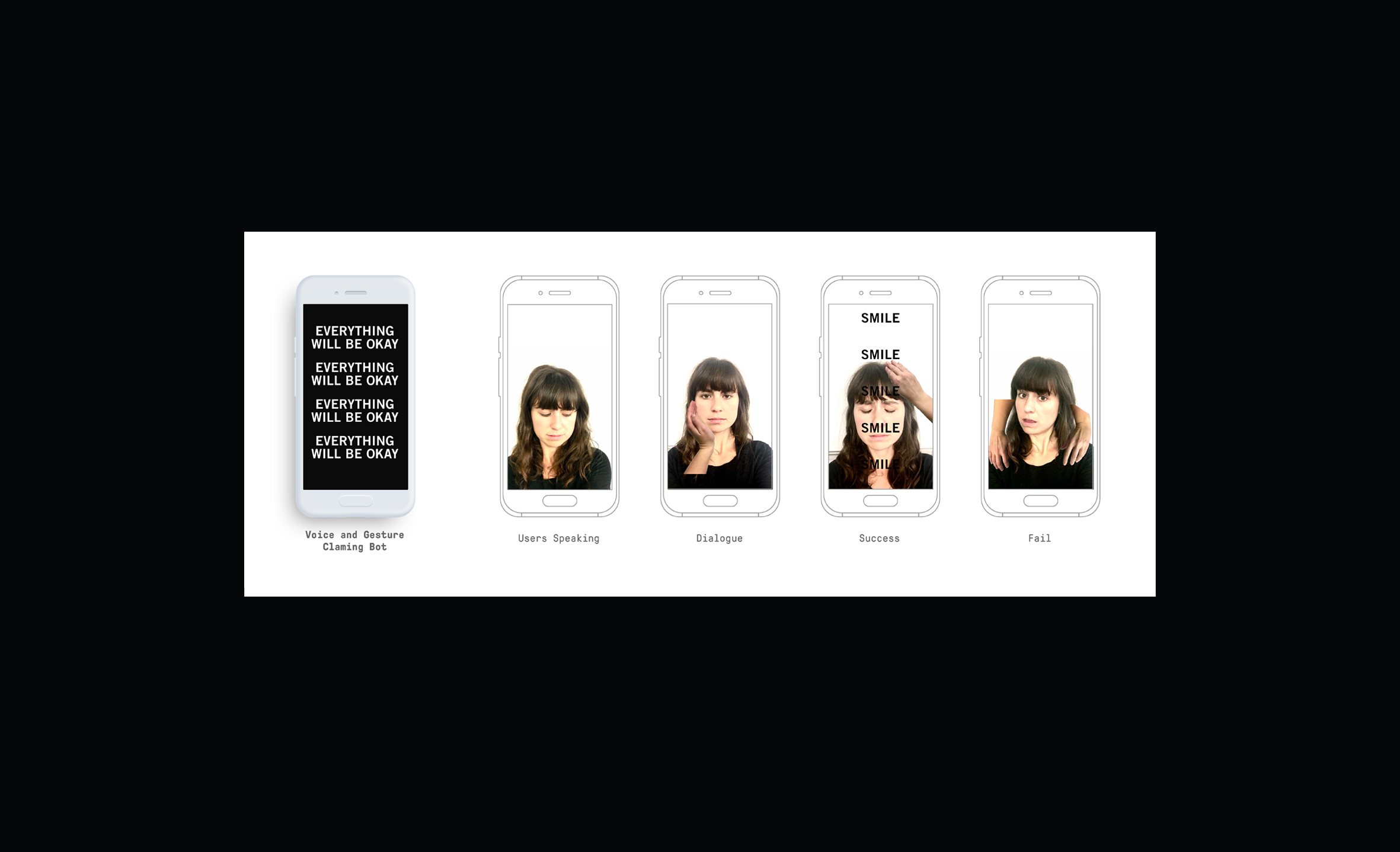

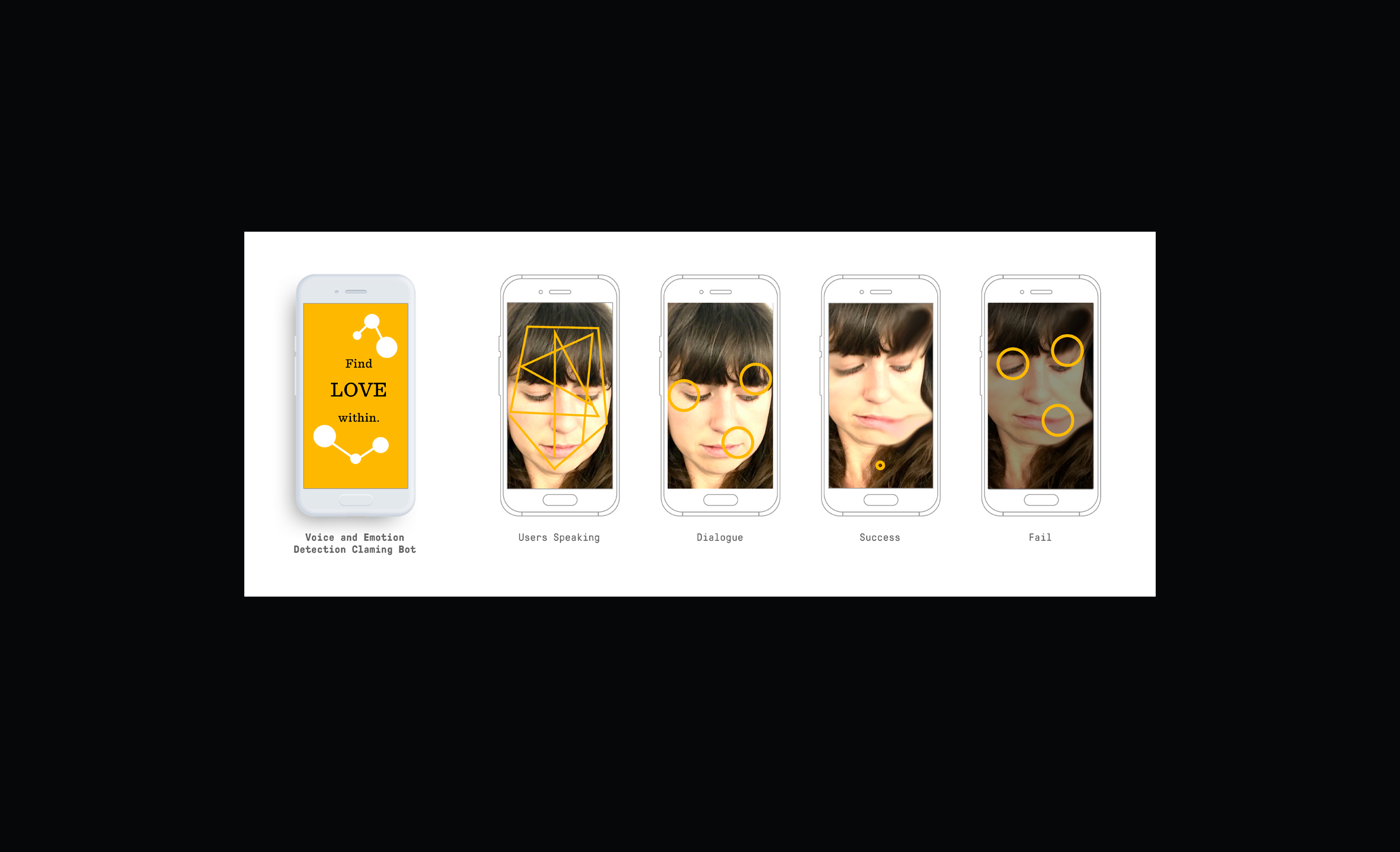

Study 02

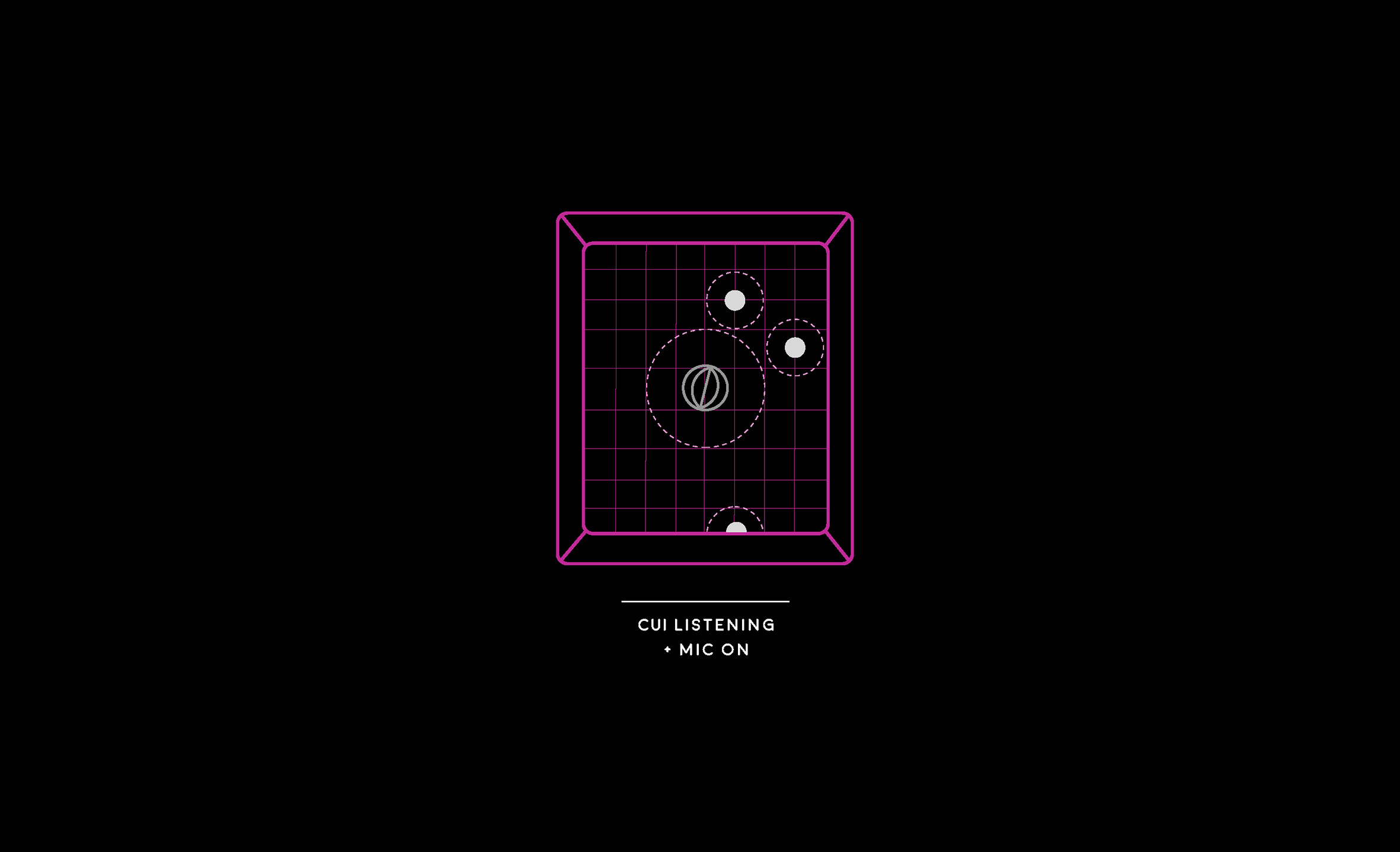

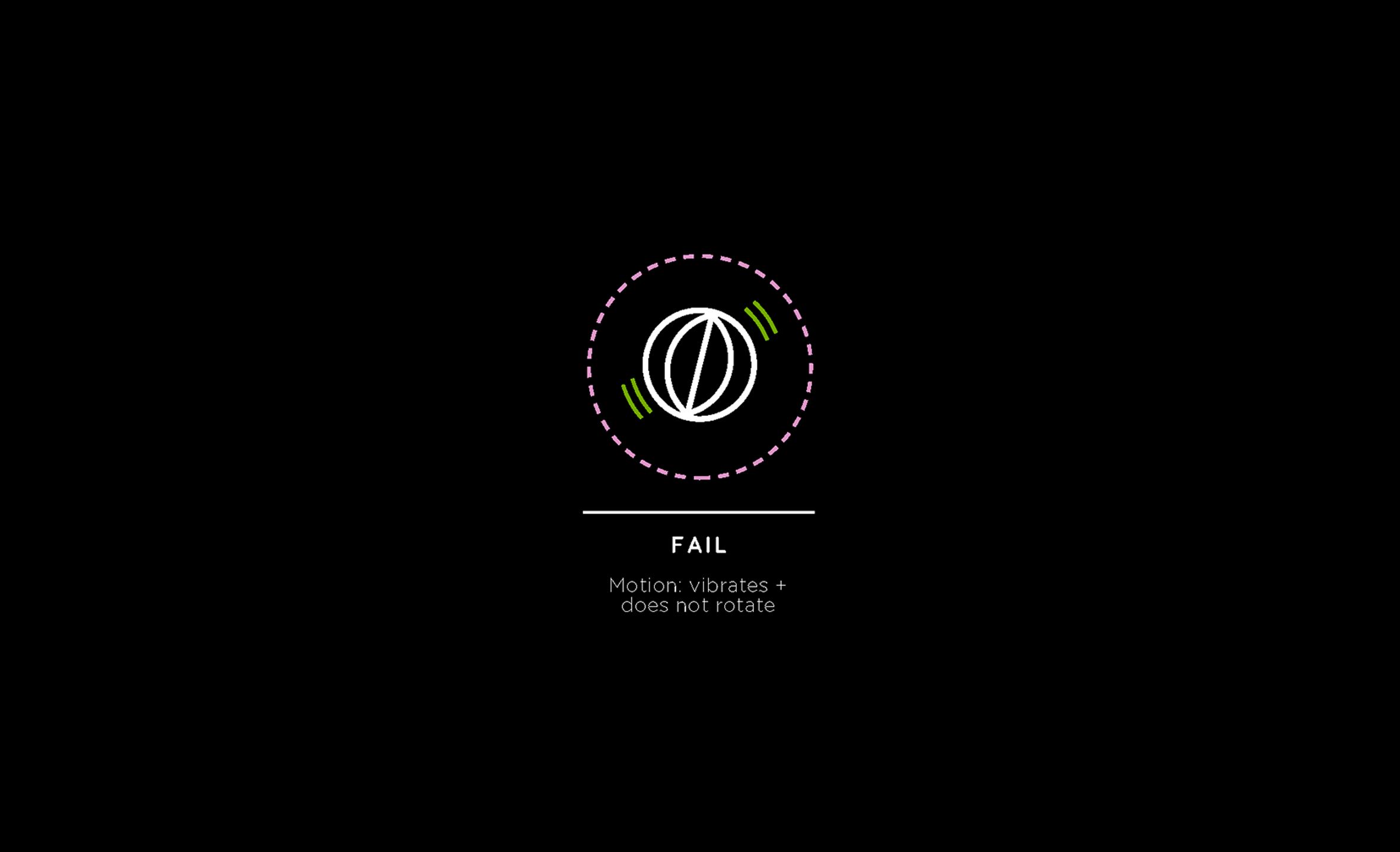

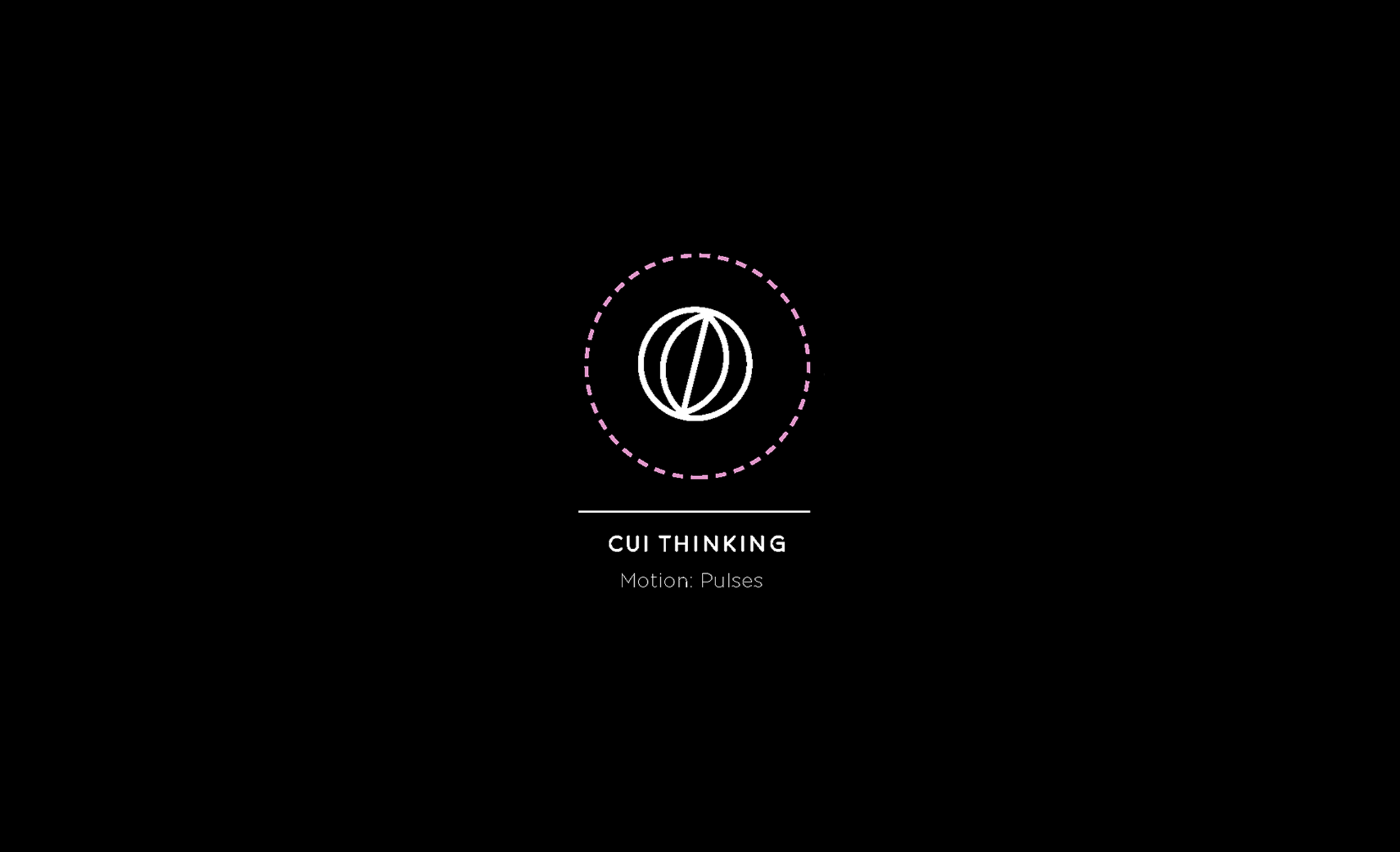

Study 02 looks at how we might visualize the CUI. Three directions were explored, each visualizing four states of interaction between the user and the bot.

Final Product: Calming Bot on iPhone

Prompt: Push the visual range of what a calming-focused CUI might look like in use.

Three Directions Explored: Voice Calming Bot / Voice and Gesture Calming Bot / Voice and Emotion Detection Calming Bot

Four Visual Dialog States: User Speaking / Dialogue / Success / Fail

Process: Rapid Prototyping / Present for Feedback

1 Calming bot Hi-fi prototypes pdf <https://drive.google.com/file/d/1P2j0ihkf-RNKnSEi-sVa_ZlVKMNZGWlQ/view?usp=sharing>

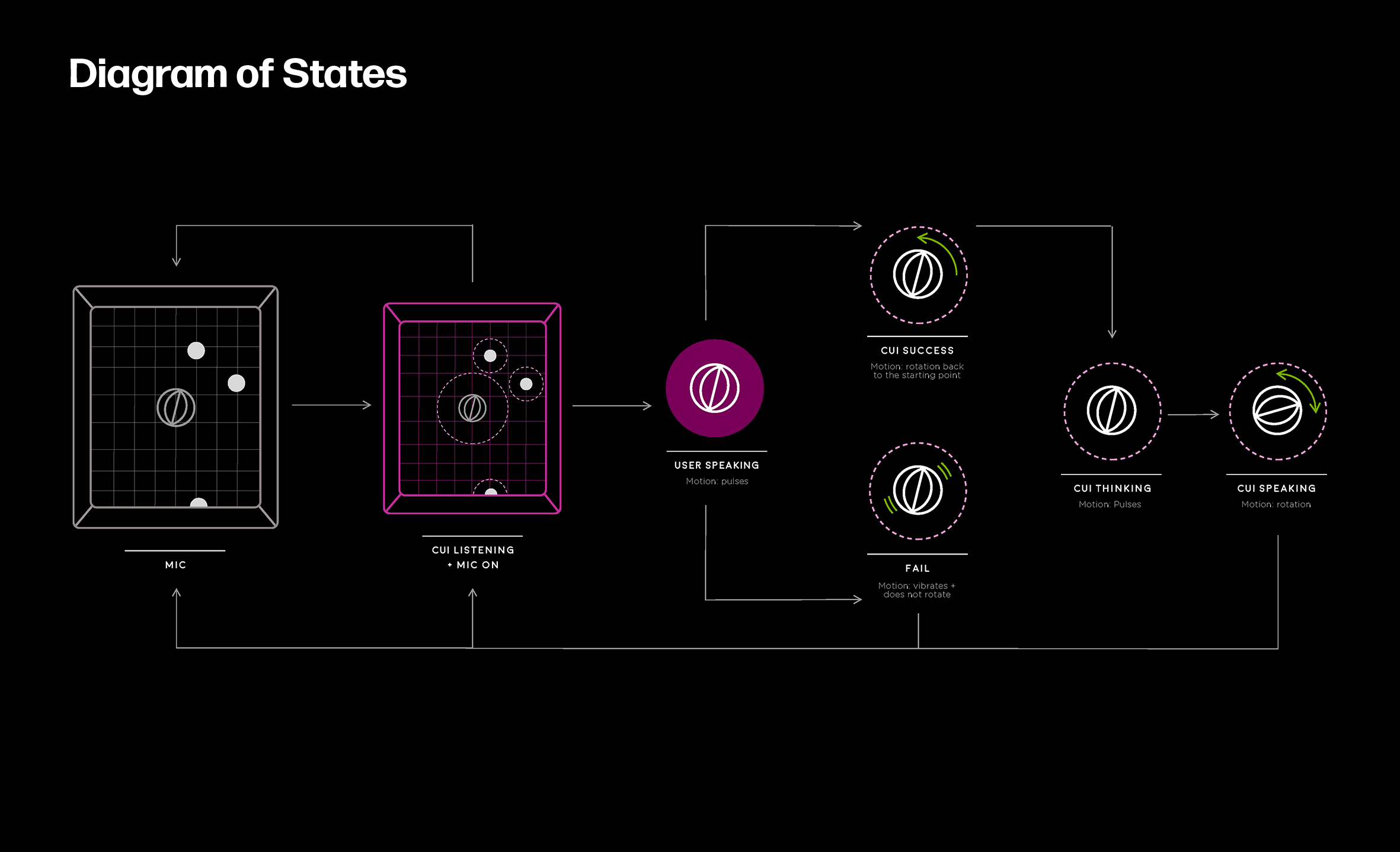

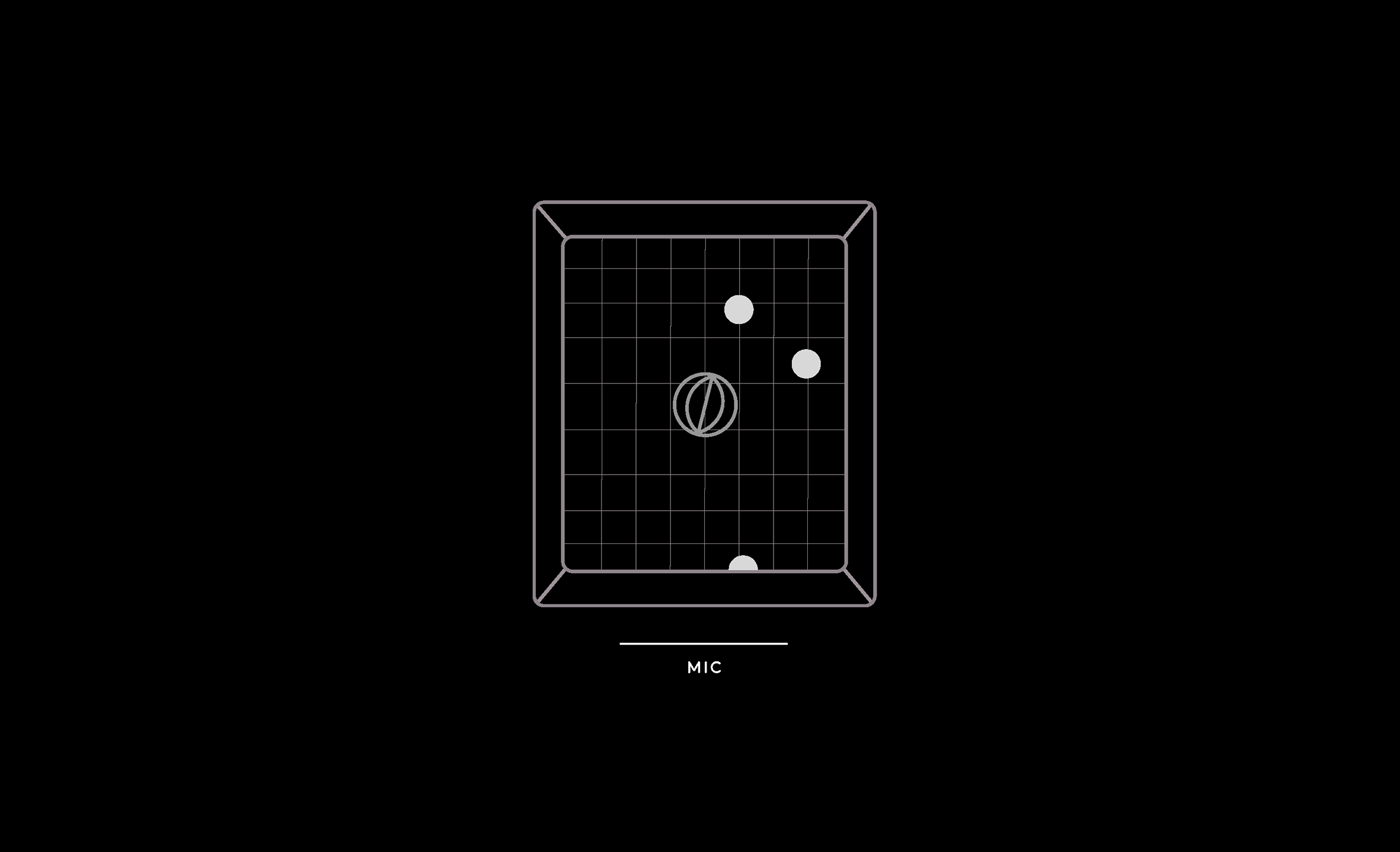

Study 03

Study 03 illustrates a possible positive relationship between humans and two machine learning technologies: Natural Language Processing (NLP) and Image Recognition.

Final Product: Microaggression Detection and Correction CUI on an Apple Watch

Prompt: Explore the implications of Image Recognition combined with CUI. How might we ensure that machines uphold human values, rather than humans converting to machine values?

Visual Inspiration: The perspective of an observer looking down onto a dance floor.

1 Diagram of states pdf <https://drive.google.com/file/d/1Uw6JgA2olDzKdKErf_tdO015VCR5ruGS/view?usp=sharing>

2 Yes& blog post <https://academics.design.ncsu.edu/yesand/2020/02/16/dependencui/>